Standard (batch) gradient descent can get stuck in a local minimum rather than the global minimum, which leaves us with a suboptimal network. In batch gradient descent, we take all of our rows, feed them into the same neural network, measure the resulting error, and only then adjust the weights.
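Both ideas can be made concrete with a small NumPy sketch. The toy loss `f(w) = w**4 - 3*w**2 + w` and the helper names `descend_1d` and `batch_gradient_descent` are hypothetical, chosen only for illustration, and a linear model stands in for a neural network so the gradient can be written by hand. The first part shows that where descent ends up depends on where it starts: this loss has a global minimum near w ≈ -1.30 and a shallower local minimum near w ≈ 1.13. The second part shows the batch update, where every weight adjustment is computed from all rows at once.

```python
import numpy as np


def f(w):
    # Hypothetical non-convex toy loss with two minima (for illustration only).
    return w**4 - 3 * w**2 + w


def df(w):
    # Derivative of f, used as the gradient.
    return 4 * w**3 - 6 * w + 1


def descend_1d(w0, lr=0.01, steps=1000):
    """Plain gradient descent on f from starting point w0."""
    w = w0
    for _ in range(steps):
        w -= lr * df(w)
    return w


def batch_gradient_descent(X, y, lr=0.1, epochs=500):
    """Fit y ~ X @ w + b by full-batch gradient descent on mean squared error.

    Assumption: a linear model stands in for a neural network.
    Every update uses all rows of X at once.
    """
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        pred = X @ w + b                 # forward pass over every row
        err = pred - y                   # error for every row
        grad_w = (2.0 / n) * X.T @ err   # MSE gradient w.r.t. the weights
        grad_b = (2.0 / n) * err.sum()   # MSE gradient w.r.t. the bias
        w -= lr * grad_w                 # one weight adjustment per full pass
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    # Same algorithm, different starting points, different minima.
    print(descend_1d(2.0), descend_1d(-2.0))
    X = np.array([[0.0], [1.0], [2.0], [3.0]])
    y = np.array([1.0, 3.0, 5.0, 7.0])  # generated from y = 2x + 1
    print(batch_gradient_descent(X, y))
```

Starting at `w0 = 2.0` the descent settles in the shallower local minimum, while starting at `w0 = -2.0` it reaches the global one; nothing in the update rule itself tells the algorithm that a better minimum exists elsewhere. In the batch version, the cost of each step grows with the number of rows, since every row is pushed through the model before a single adjustment is made.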

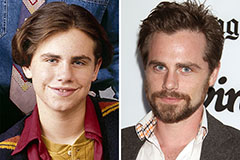

Rider Strong Then & Now!

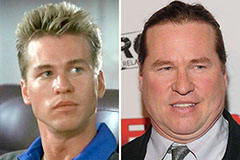

Rider Strong Then & Now! Val Kilmer Then & Now!

Val Kilmer Then & Now! Nancy Kerrigan Then & Now!

Nancy Kerrigan Then & Now! Catherine Bach Then & Now!

Catherine Bach Then & Now! Peter Billingsley Then & Now!

Peter Billingsley Then & Now!